User Interface

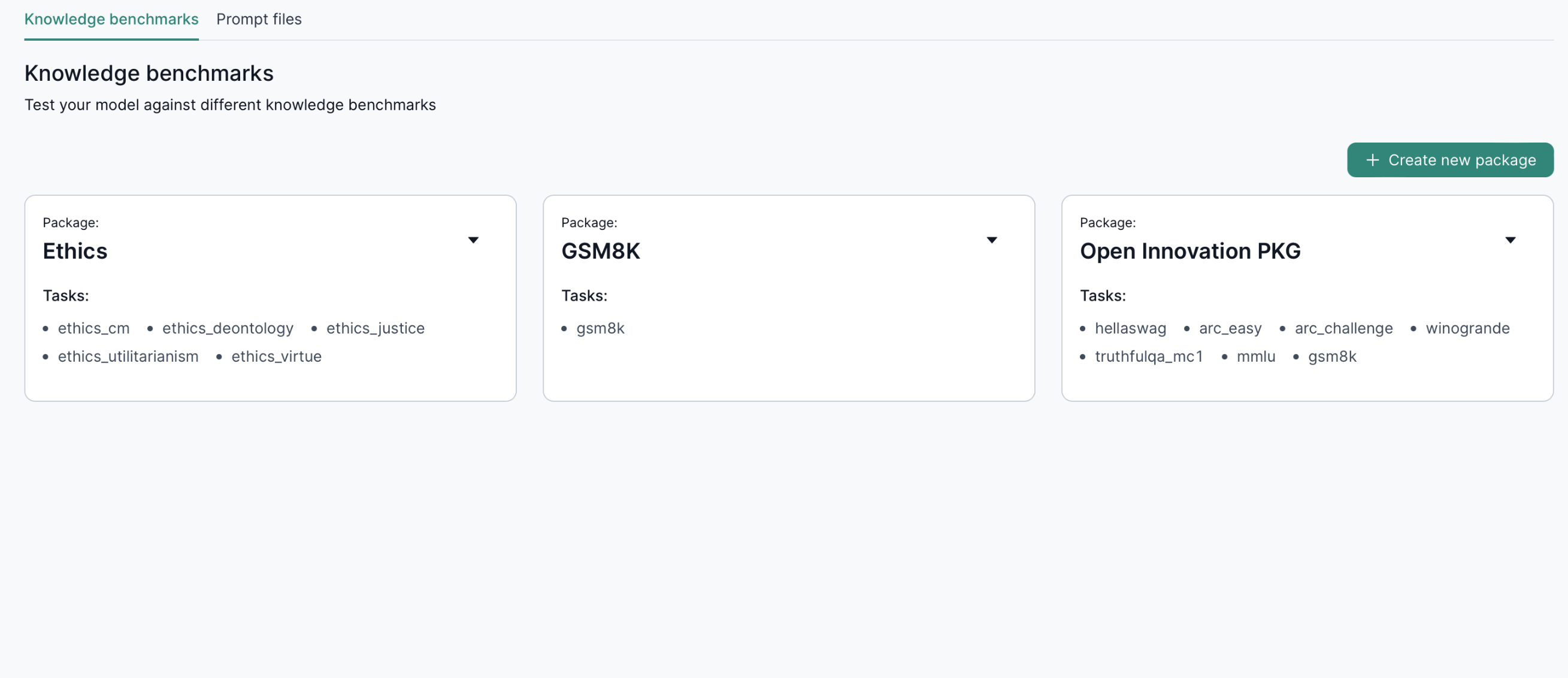

Selecting Benchmarks from the sidebar takes you to the benchmarks homepage, which lists your benchmark packages. Each package is simply a set of benchmarks.

Benchmarks

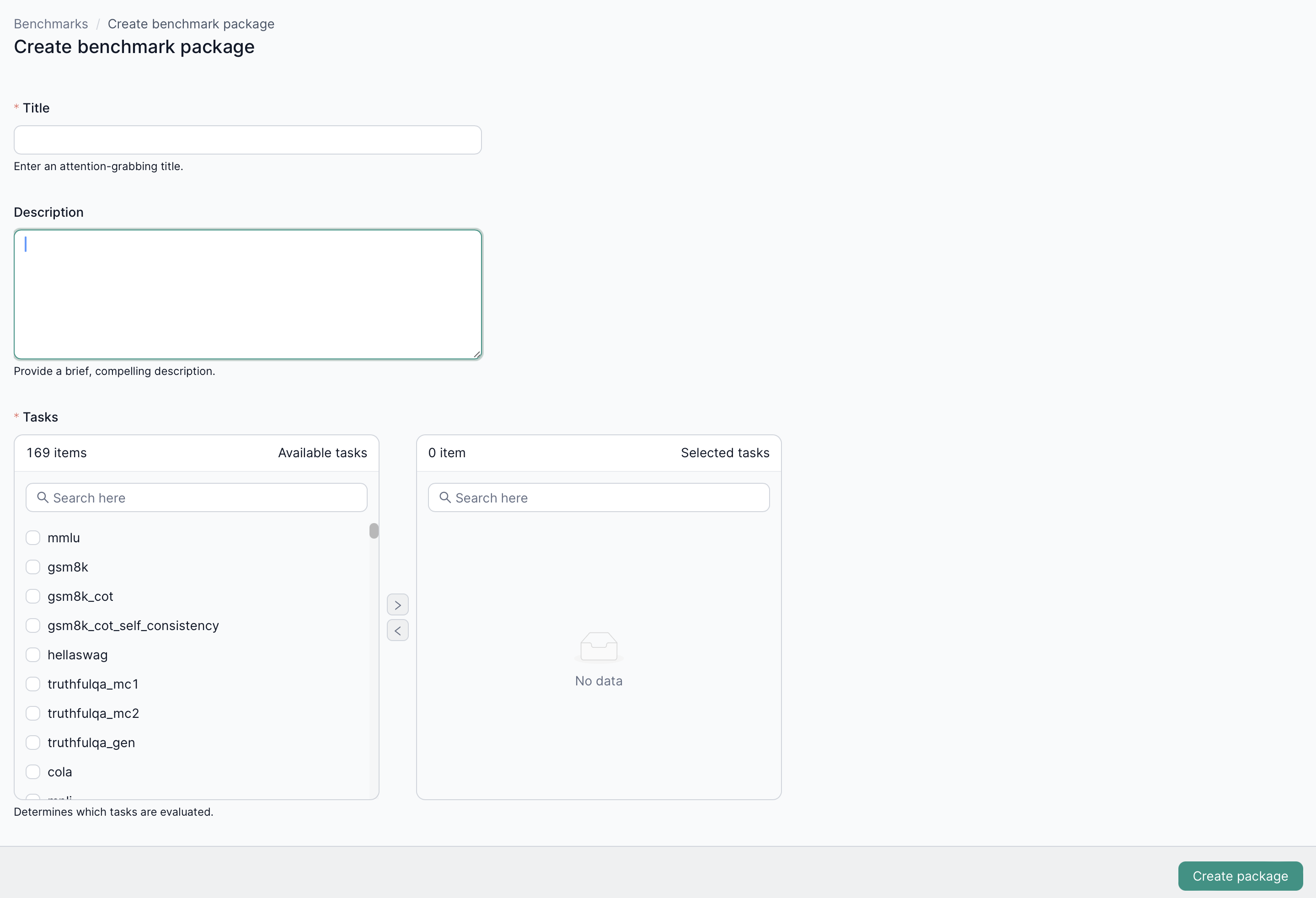

Create a new benchmark package

To create a new benchmark package, click Create new package. A form guides you through entering the essential details, such as the package name and the tasks to evaluate.

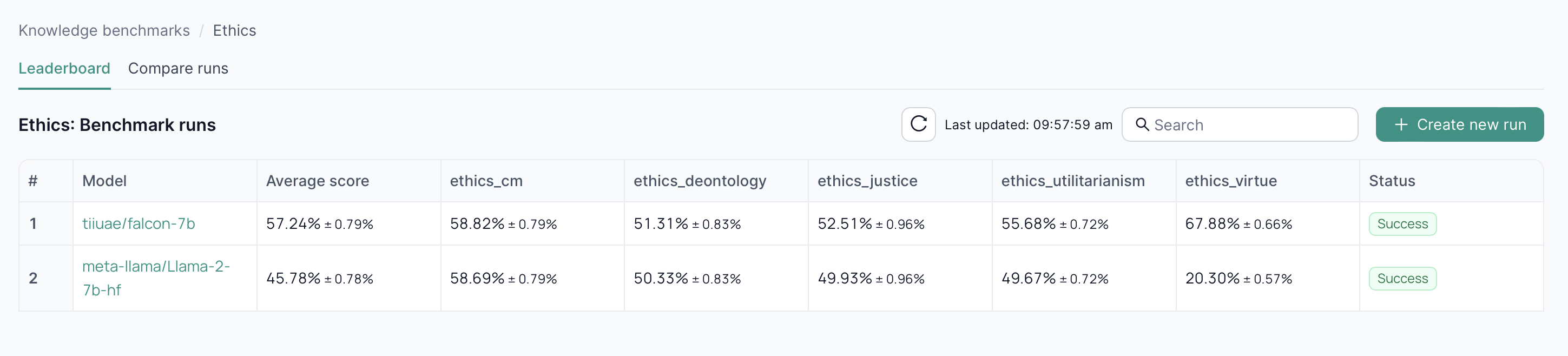

Benchmark runs

After selecting a benchmark package, you'll be directed to the benchmark runs page. Here, you can view all available runs for this package.

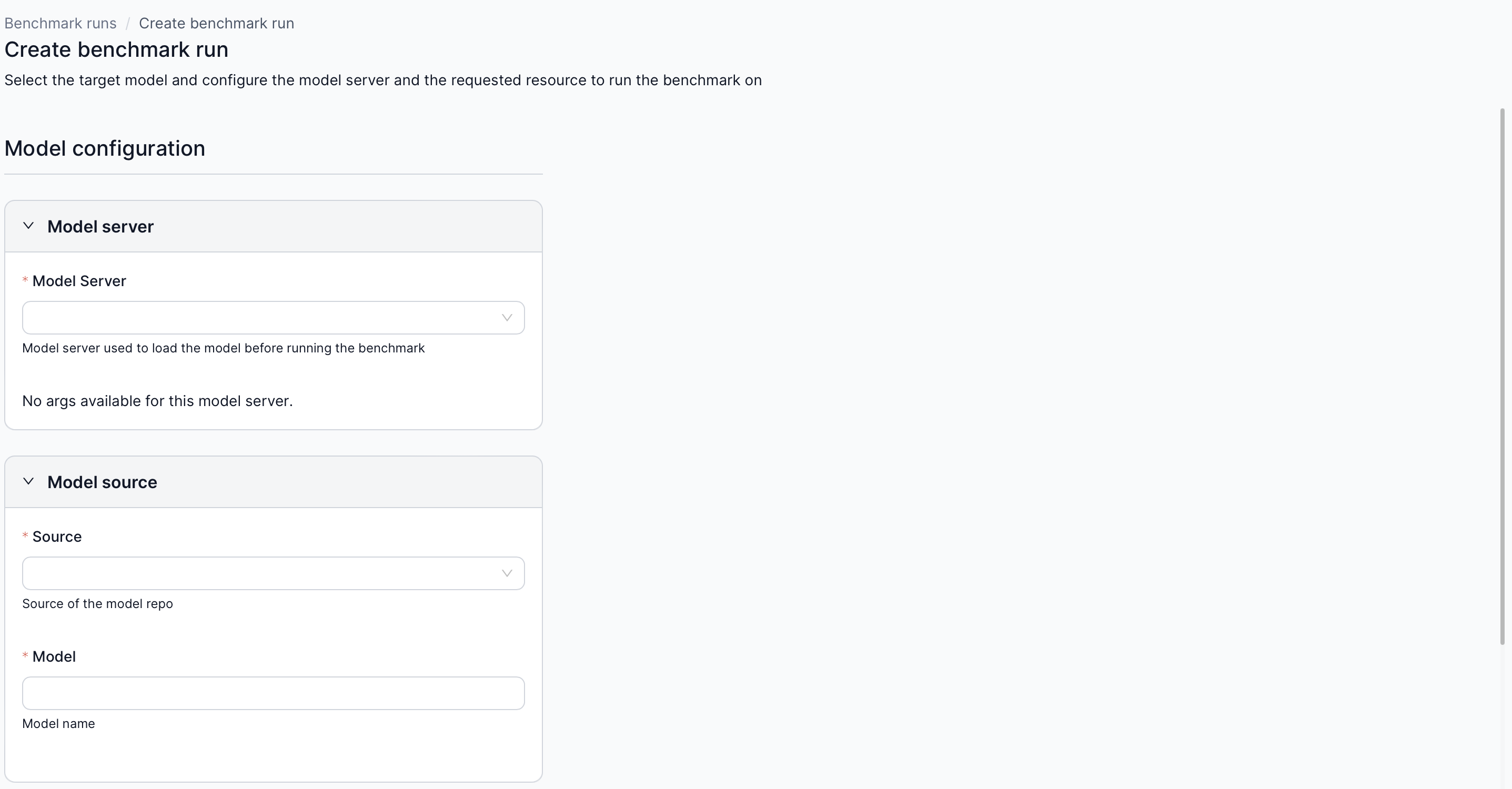

Create a new run

To create a new run, click Create new run and specify both the model and the resources required to run your benchmark.

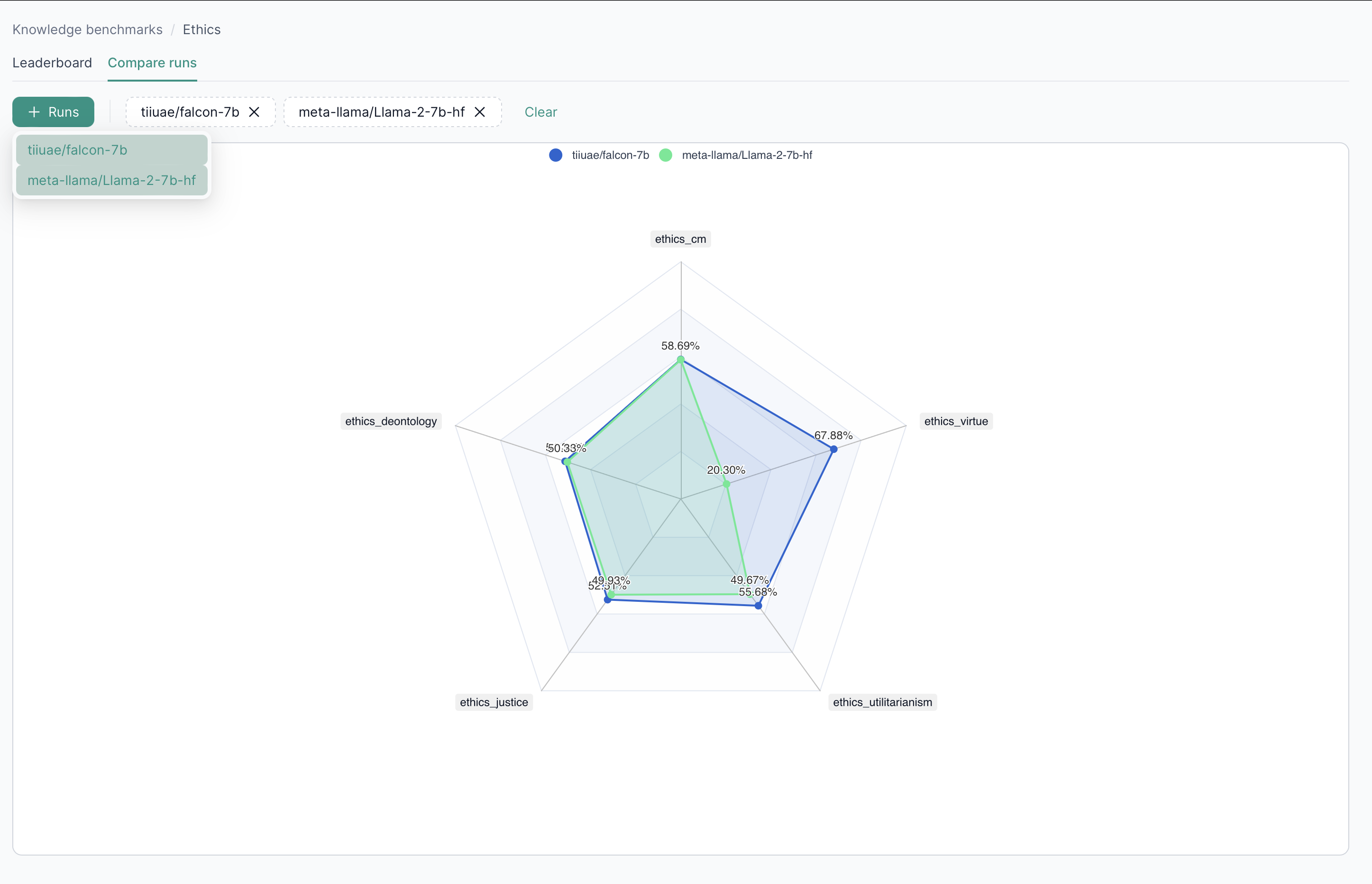

Compare runs

When you navigate to the Compare runs tab, you can select several runs that you want to compare.

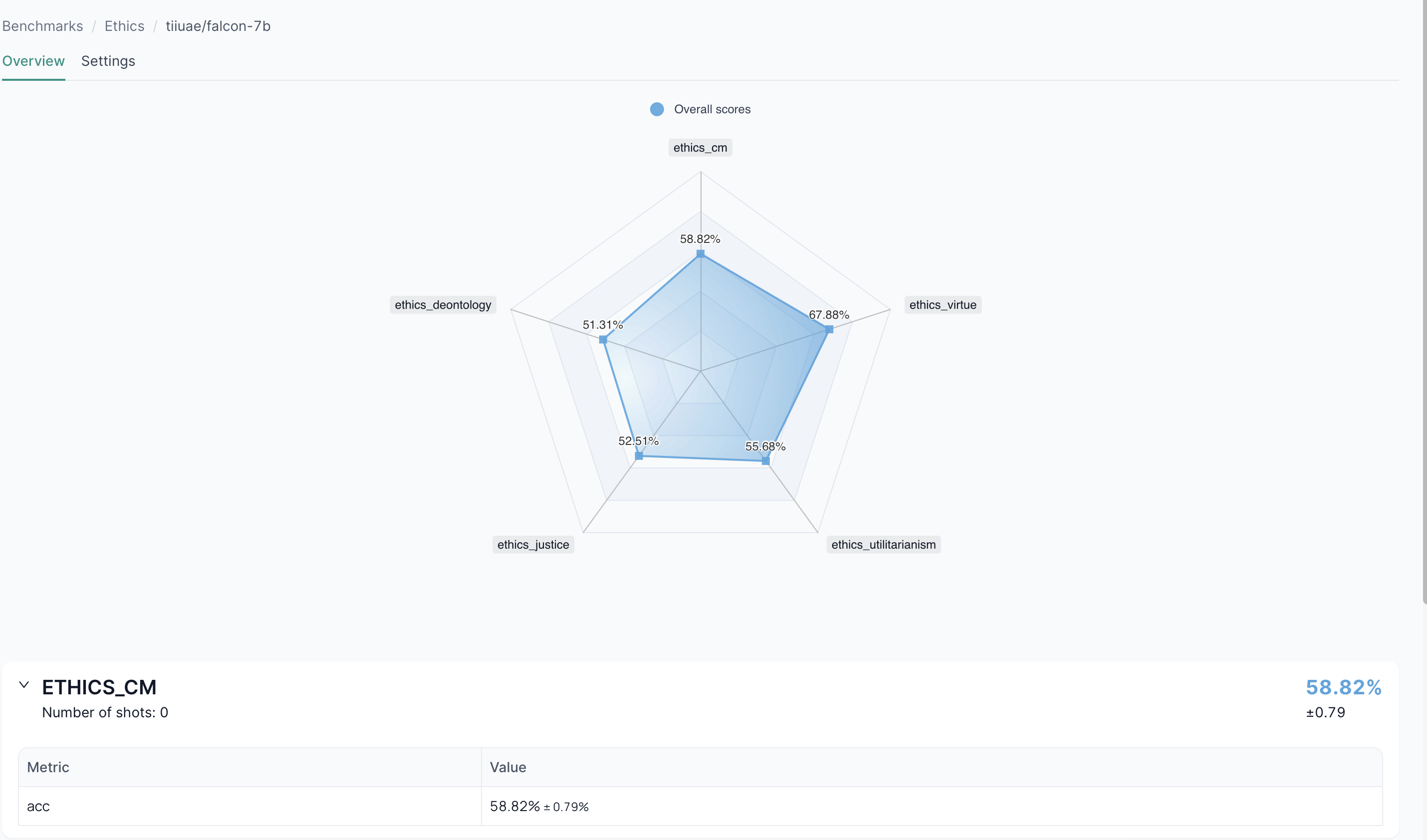

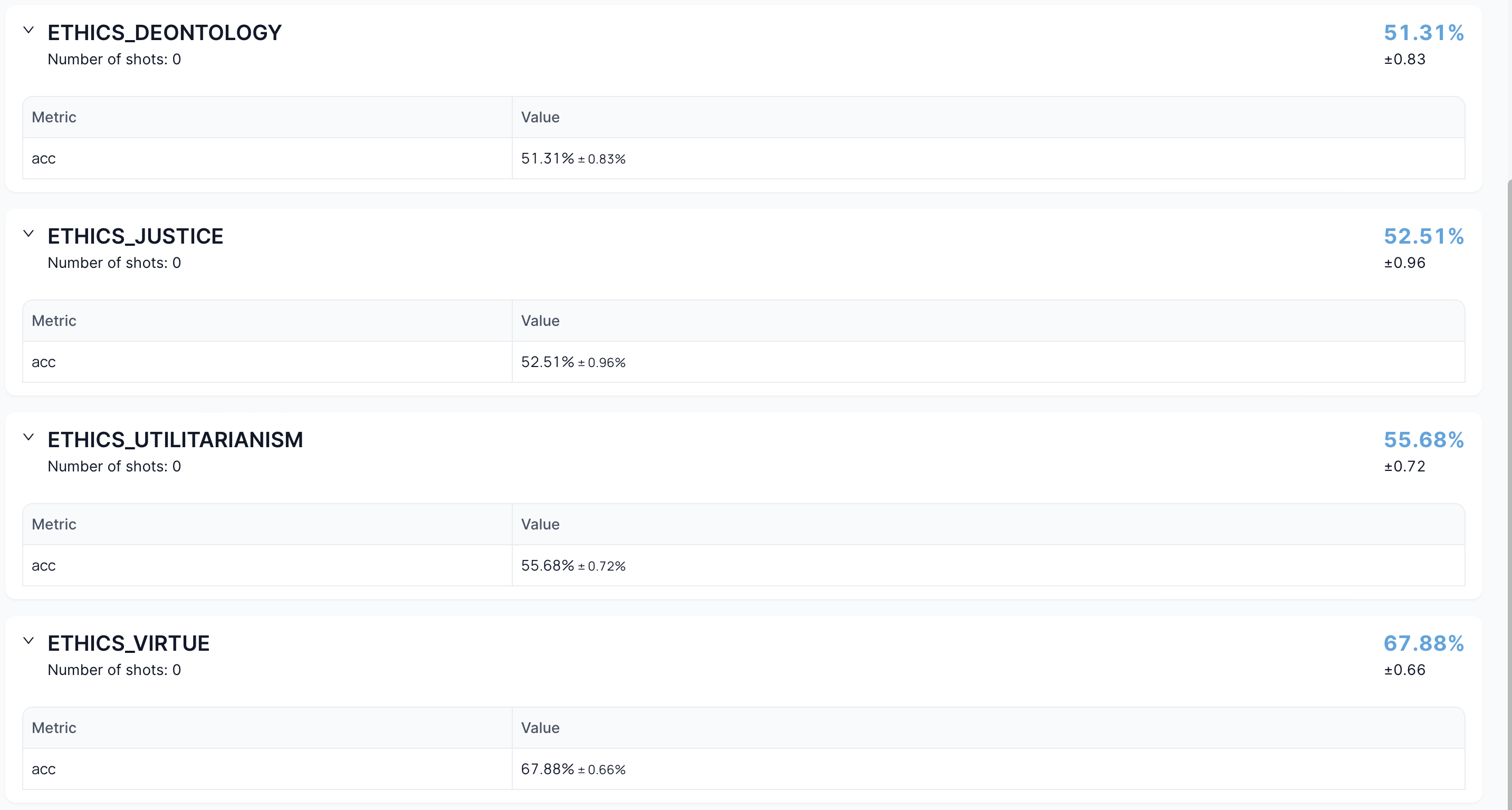

Benchmark run overview

After selecting a specific run, you will be directed to the run overview page where you can see all the details of the selected run.

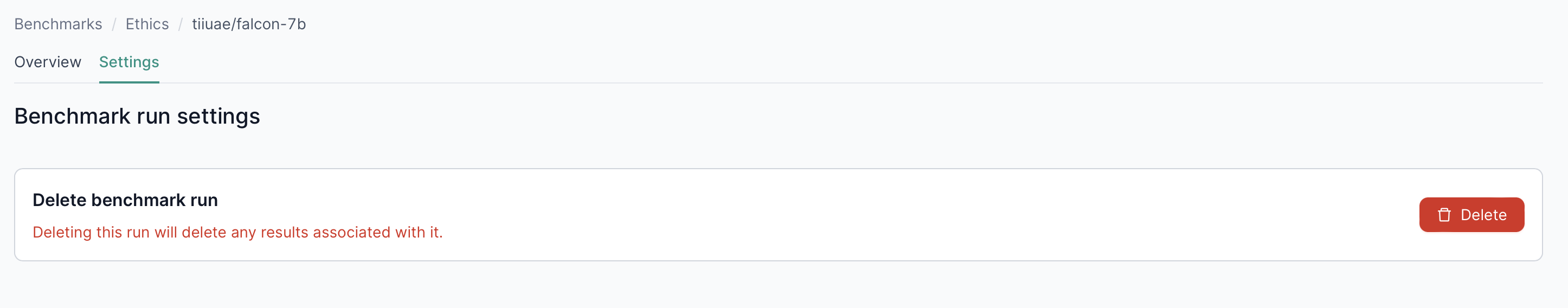

Benchmark run settings

To delete a specific run, select it, then navigate to the settings tab where you can find the delete button.

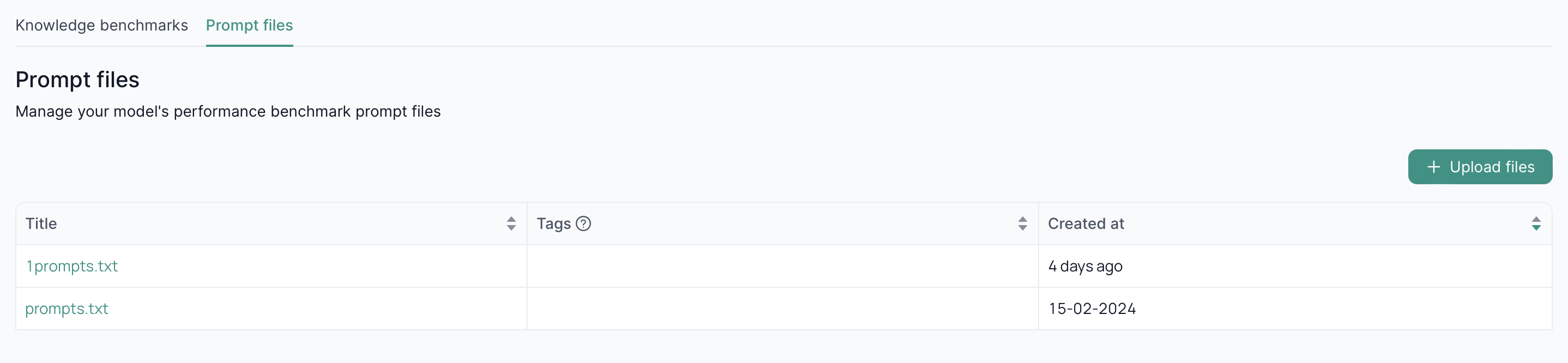

Prompt files

To display all existing prompt files, select Benchmarks from the sidebar and then open Prompt files. Prompt files are used in the performance benchmark process.

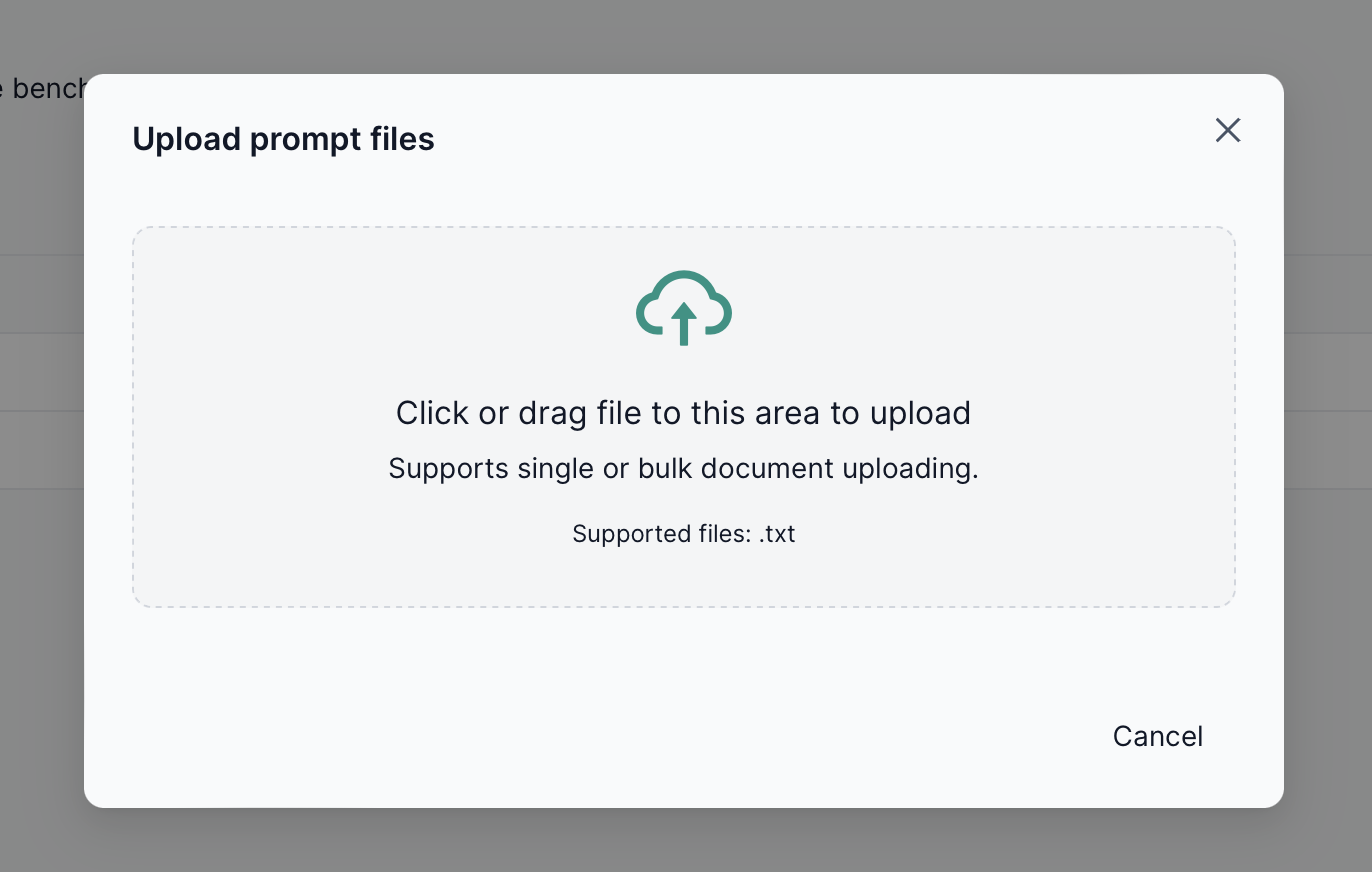

To add a prompt file, click Upload files.

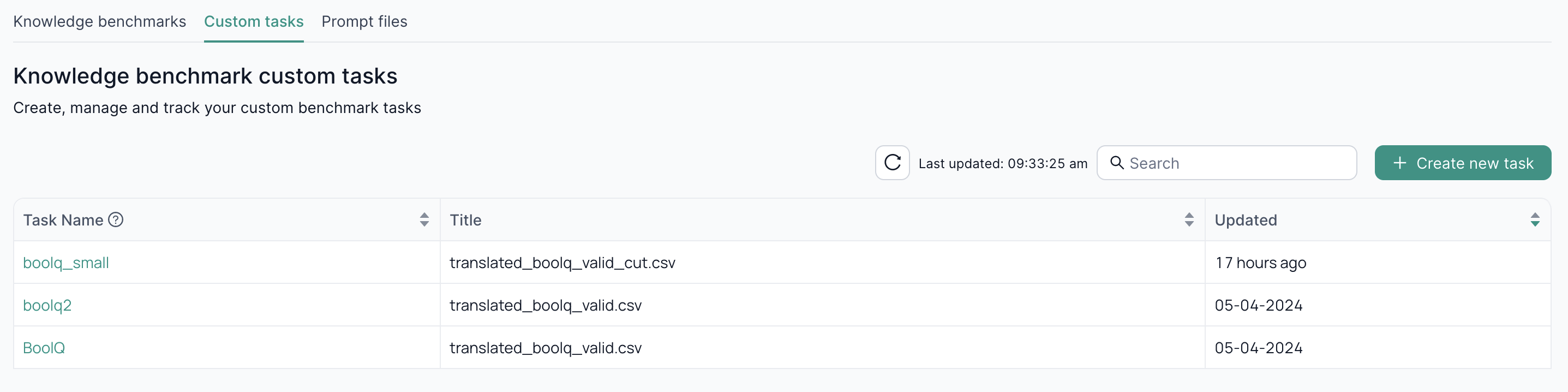

Custom tasks

The Custom tasks tab contains the user-defined benchmark tasks.

These tasks can be added to a benchmark package alongside the tasks the platform provides out of the box.

Creating a user-defined task

You can create a user-defined task by selecting Create new task and filling in the form.

General

- Task name: the name of the task. Must be unique.
- Description: the task description.
- Dataset: the dataset file to use for the task. CSV and JSON are supported.
- Metrics: the list of metrics used to evaluate the task.
- Task Output Type: the type of the task, one of:
  - `generate_until`: generate text until the EOS token is reached.
  - `loglikelihood`: return the log-likelihood of generating a piece of text given a certain input.
  - `loglikelihood_rolling`: return the log-likelihood of generating a piece of text.
  - `multiple_choice`: choose one of the provided options.
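The General form can be pictured as a small typed record. The sketch below is a hypothetical Python model, not the platform's actual schema. The names `GeneralSettings`, `TaskOutputType` and `validate` are illustrative. It encodes only the two rules stated above: task names must be unique, and datasets must be CSV or JSON.

```python
from dataclasses import dataclass, field
from enum import Enum
from pathlib import Path


class TaskOutputType(Enum):
    """The four task output types offered by the General form."""

    GENERATE_UNTIL = "generate_until"
    LOGLIKELIHOOD = "loglikelihood"
    LOGLIKELIHOOD_ROLLING = "loglikelihood_rolling"
    MULTIPLE_CHOICE = "multiple_choice"


@dataclass
class GeneralSettings:
    """Hypothetical mirror of the General form (not the platform's real schema)."""

    task_name: str
    description: str
    dataset: str  # path to the dataset file
    output_type: TaskOutputType
    metrics: list[str] = field(default_factory=list)

    def validate(self, existing_names: set[str]) -> None:
        """Raise ValueError if a documented constraint is violated."""
        # Task names must be unique.
        if self.task_name in existing_names:
            raise ValueError(f"task name {self.task_name!r} already exists")
        # Only CSV and JSON dataset files are supported.
        if Path(self.dataset).suffix.lower() not in {".csv", ".json"}:
            raise ValueError("dataset must be a .csv or .json file")


settings = GeneralSettings(
    task_name="bread_qa",
    description="Reading comprehension on recipe texts",
    dataset="bread.csv",
    output_type=TaskOutputType("generate_until"),
)
settings.validate(existing_names={"sciq_custom"})
```

Using an enum for the output type rejects misspelled values at construction time. For example, `TaskOutputType("multiple-choice")` raises `ValueError`.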

Prompting

To define the prompt, fill in the following fields:

- Prompt Column: the prompt to feed to the LLM. Can be either a column in the dataset or a template.
- Answer Column: the expected answer. Can be either a column in the dataset or a template.
- Possible Choices: the possible choices when using the `multiple_choice` task output type.
- Fixed Choices: whether the same set of choices (Possible Choices) is used for every prompt.
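The Prompt Column and Answer Column fields accept either a column name or a template. This minimal sketch shows one plausible way to resolve such a field against a dataset row. Two parts of it are assumptions:

- the resolution rule: an exact column name wins, and anything else is rendered as a template with `{{column}}` placeholders;
- the substitution: the regex replacement is a simplified stand-in for a full template engine such as Jinja2.

`resolve_field` is a hypothetical helper.

```python
import re

# Matches {{ name }} placeholders; a simplified stand-in for a real template engine.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def resolve_field(value: str, row: dict[str, str]) -> str:
    """Resolve a Prompt/Answer Column setting against one dataset row.

    Assumed rule: if the value is exactly a column name, return that column's
    value; otherwise treat it as a template and substitute each {{column}}.
    """
    if value in row:
        return row[value]
    return PLACEHOLDER.sub(lambda m: row[m.group(1)], value)


row = {"question": "What is the text about?", "answer": "Bread"}
prompt = resolve_field("Q: {{question}} A:", row)
```

A placeholder that names a missing column raises `KeyError` here, which surfaces configuration typos early.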

See Prompt examples for some examples.

Few-shots Configuration

You can configure the task to include few-shot examples in the question prompt.

- Number of few-shots: the number of few-shot examples to add to the prompt.
- Few-shots description: a string prepended to the few-shot examples. Can be either a fixed string or a template.
- Few-shots delimiter: the string inserted between few-shot examples. Default is a blank line (`"\n\n"`).
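To make the few-shot settings concrete, here is a minimal sketch of how the description, the few-shot examples, and the delimiters might be combined into one prompt. It assumes two things the platform may do differently:

- each few-shot example is rendered as its question, then the target delimiter, then its answer;
- the first `num_fewshot` examples are used, whereas the platform may sample them.

The function and parameter names are hypothetical.

```python
def build_fewshot_prompt(
    question: str,
    shots: list[tuple[str, str]],
    num_fewshot: int,
    description: str = "",
    fewshot_delimiter: str = "\n\n",
    target_delimiter: str = " ",
) -> str:
    """Assemble description + few-shot examples + question (hypothetical layout).

    Each example is rendered as question + target_delimiter + answer, and the
    examples and the final question are separated by fewshot_delimiter.
    The description is prepended verbatim, so it carries its own trailing separator.
    """
    examples = [q + target_delimiter + a for q, a in shots[:num_fewshot]]
    return description + fewshot_delimiter.join(examples + [question])


shots = [("Q: 2+2?", "4"), ("Q: 3+3?", "6"), ("Q: 5+5?", "10")]
prompt = build_fewshot_prompt("Q: 1+1?", shots, num_fewshot=2, description="Answer the sums.\n\n")
```

This call uses two shots and the default delimiters, so `prompt` is `"Answer the sums.\n\nQ: 2+2? 4\n\nQ: 3+3? 6\n\nQ: 1+1?"`.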

Advanced

- Repeat Runs: the number of times each sample is fed to the LLM.
- Target delimiter: the string added between the question prompt and the answer. Default is a single whitespace (`" "`).
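The Advanced settings can be read as two small transformations on each sample. In this hypothetical sketch, every (prompt, answer) pair becomes a (context, continuation) request. The continuation is the target delimiter followed by the answer, and each request is repeated Repeat Runs times. This treatment of the continuation follows the convention of loglikelihood-style evaluation harnesses and is an assumption about this platform. `build_requests` is an illustrative name.

```python
def build_requests(
    samples: list[tuple[str, str]],
    repeats: int = 1,
    target_delimiter: str = " ",
) -> list[tuple[str, str]]:
    """Expand (prompt, answer) samples into (context, continuation) requests.

    The continuation is target_delimiter + answer, i.e. the text a
    loglikelihood-style request would score after the prompt. Each sample is
    emitted `repeats` times, mirroring the Repeat Runs setting.
    """
    return [
        (prompt, target_delimiter + answer)
        for prompt, answer in samples
        for _ in range(repeats)
    ]


requests = build_requests([("Question: Capital of France?\nAnswer:", "Paris")], repeats=3)
```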

Prompt examples

Basic prompt

Assume we have a dataset with a question column containing the prompt we want to feed to the LLM and an answer column containing the expected answer.

We can then configure our task with

- Prompt Column: `question`
- Answer Column: `answer`
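For the basic case, the configuration simply selects two columns. This sketch reads a CSV dataset with Python's standard `csv` module and pairs the Prompt Column with the Answer Column. The two rows are made-up placeholders, and `load_pairs` is a hypothetical helper.

```python
import csv
import io

# Illustrative dataset; these rows are made up for the example.
DATASET_CSV = """question,answer
What is the capital of France?,Paris
How many legs does a spider have?,8
"""


def load_pairs(csv_text: str, prompt_column: str, answer_column: str) -> list[tuple[str, str]]:
    """Return (prompt, expected answer) pairs taken from the configured columns."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [(row[prompt_column], row[answer_column]) for row in reader]


pairs = load_pairs(DATASET_CSV, prompt_column="question", answer_column="answer")
```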

Template prompt

Assume we have a dataset like

| passage | question | answer |
| --- | --- | --- |
| In this document we describe a recipe to make bread | What is the text about? | Bread |

And we want each generated prompt to show the passage, then the question, and to end with an "Answer:" cue.

We can then configure our task with

- Prompt Column: `Text:\n{{passage}}\n\nQuestion: {{question}}\n\nAnswer:`
- Answer Column: `answer`
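To see what the template produces, this sketch renders the Prompt Column template against the example row. It makes two simplifications:

- it substitutes `{{column}}` placeholders with a regex rather than the platform's actual template engine, which this page does not specify;
- it treats each `\n` in the template as a newline.

`render` is a hypothetical helper.

```python
import csv
import io
import re

DATASET_CSV = """passage,question,answer
In this document we describe a recipe to make bread,What is the text about?,Bread
"""

# Prompt Column template from the configuration above ("\n" is a newline here).
PROMPT_TEMPLATE = "Text:\n{{passage}}\n\nQuestion: {{question}}\n\nAnswer:"


def render(template: str, row: dict[str, str]) -> str:
    """Replace each {{column}} placeholder with the row's value (simplified Jinja-style)."""
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", lambda m: row[m.group(1)], template)


row = next(csv.DictReader(io.StringIO(DATASET_CSV)))
prompt = render(PROMPT_TEMPLATE, row)
target = row["answer"]
print(prompt)
```

The printed prompt contains, in order:

- a `Text:` line followed by the passage;
- a blank line, then `Question: What is the text about?`;
- another blank line, then `Answer:`.

The expected answer taken from the Answer Column is `Bread`.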

Multiple choice prompt

Assume we have a dataset like

| question | distractor1 | distractor2 | correct |
| --- | --- | --- | --- |
| Compounds that are capable of accepting electrons are called what? | residues | oxygen | oxidants |

We can then configure our task with

- Prompt Column: `question`
- Possible Choices: `distractor1`, `distractor2`, `correct`
- Answer Column: `correct`
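Multiple-choice tasks are commonly scored by asking the model for the log-likelihood of each choice and picking the highest. EleutherAI's lm-evaluation-harness does this for its `multiple_choice` output type. Assuming the platform works the same way, this sketch does three things:

- builds the example row's choices from the Possible Choices columns;
- finds the gold index from the Answer Column;
- scores a prediction.

The log-likelihood values are invented for illustration, not real model output. The helper names are hypothetical.

```python
import csv
import io

DATASET_CSV = """question,distractor1,distractor2,correct
Compounds that are capable of accepting electrons are called what?,residues,oxygen,oxidants
"""

POSSIBLE_CHOICES = ["distractor1", "distractor2", "correct"]  # column names
ANSWER_COLUMN = "correct"


def choices_and_gold(row: dict[str, str]) -> tuple[list[str], int]:
    """Read this row's choices from the configured columns and locate the gold answer."""
    choices = [row[col] for col in POSSIBLE_CHOICES]
    return choices, choices.index(row[ANSWER_COLUMN])


def predict(choice_logliks: list[float]) -> int:
    """Return the index of the choice with the highest log-likelihood."""
    return max(range(len(choice_logliks)), key=choice_logliks.__getitem__)


row = next(csv.DictReader(io.StringIO(DATASET_CSV)))
choices, gold = choices_and_gold(row)
# Made-up log-likelihoods for illustration; a real run would obtain these from the model.
logliks = [-7.2, -5.1, -2.3]
is_correct = predict(logliks) == gold
```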

Multiple Choice Prompt with Fixed Choices

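Assume we have a dataset with a `text` column containing the input and a `label` column containing the expected answer, where every row shares the same set of choices.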

We can then configure our task with

- Prompt Column: `text`
- Possible Choices: `angry`, `sad`, `happy`
- Answer Column: `label`
- Fixed Choices: `True`
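With Fixed Choices enabled, Possible Choices appears to hold literal answer strings shared by every row. In the previous example, by contrast, they name columns. The sketch below encodes that reading, which is an interpretation rather than documented behavior. It also assumes that the `label` column stores the answer text rather than an index. The two rows and the helper name `row_choices` are made up.

```python
import csv
import io

# Illustrative rows; the example dataset for this section is not shown here.
DATASET_CSV = """text,label
I can't believe they cancelled my flight again,angry
We finally adopted a puppy,happy
"""

POSSIBLE_CHOICES = ["angry", "sad", "happy"]


def row_choices(row: dict[str, str], possible: list[str], fixed: bool) -> list[str]:
    """Return the choices for one row.

    With fixed=True the listed values are the choices themselves, shared by
    every row; otherwise each entry names a column to read from the row.
    """
    if fixed:
        return list(possible)
    return [row[col] for col in possible]


rows = list(csv.DictReader(io.StringIO(DATASET_CSV)))
# Gold index per row, assuming `label` holds the answer text.
golds = [row_choices(r, POSSIBLE_CHOICES, fixed=True).index(r["label"]) for r in rows]
```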